Research

Fire hazards in the operating room

Fire hazard and response training in the operating room. Built in Unity with an HTC Vive as primary output.

3D registration of SPIM data

Human-in-the-loop registration of SPIM microscopy volumes with GPU acceleration.

Virtual Mosquito Exposure

Virtual timelapse of mosquito bites using the Oculus DK2 and the Leap as well as custom-built hardware.

VR Motorcycle Maintenance

A quick, exploratory prototype looking into training, teaching and Vive/Unity integration as well as full-body spatial interaction.

Photogrammetry

Point cloud reconstruction from image data sets captured with handheld or airborne cameras, displayed using HTML5.

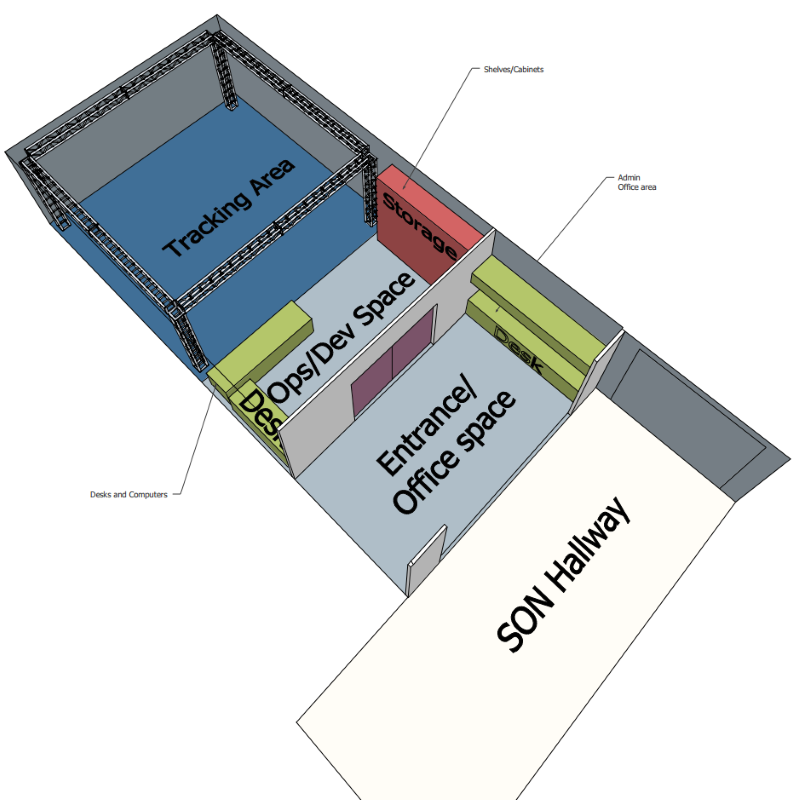

The Advanced Visualization Space

Planning and implementation of a tracking space for immersive VR visualizations and training with a focus on nursing.

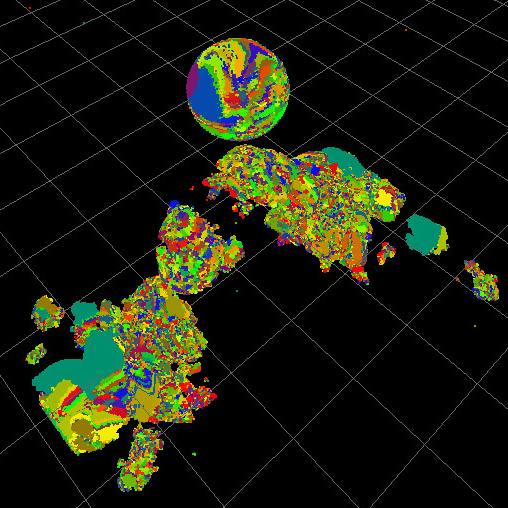

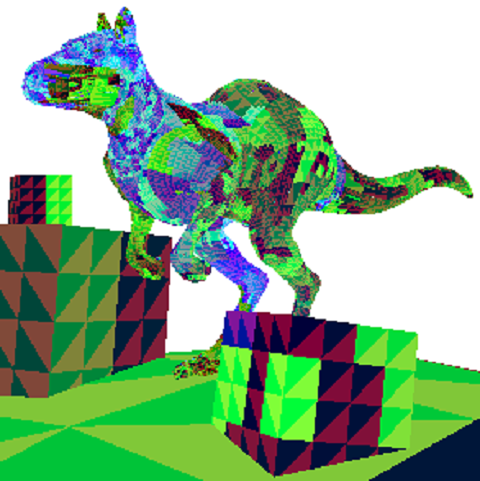

Point Cloud Compression

Compressing time-variant point cloud data by finding and describing similar motion groups over consecutive frames.
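As a rough illustration of the idea of motion groups, the sketch below buckets points by their quantized displacement between two consecutive frames, so that each group can be described by one shared motion vector plus a list of member indices. This is not the published method. It assumes that both frames store the same points in the same order, and the function name `group_by_motion` and the `cell` size are hypothetical.

```python
from collections import defaultdict


def group_by_motion(frame_a, frame_b, cell=0.05):
    """Group point indices by their quantized displacement between two frames.

    frame_a, frame_b: lists of (x, y, z) tuples; index i in both frames is
    assumed to refer to the same point (an assumption of this sketch).
    cell: quantization step for the displacement vector (hypothetical value).
    Returns a dict mapping an integer displacement key to the member indices.
    """
    groups = defaultdict(list)
    for i, (p, q) in enumerate(zip(frame_a, frame_b)):
        # Quantize the per-axis displacement so similar motions share a key.
        key = tuple(round((qc - pc) / cell) for pc, qc in zip(p, q))
        groups[key].append(i)
    return dict(groups)


if __name__ == "__main__":
    a = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0)]
    b = [(0.1, 0.0, 0.0), (1.1, 0.0, 0.0), (0.0, 1.5, 0.0)]
    for key, members in group_by_motion(a, b).items():
        print(key, members)
```

Storing one displacement per group instead of one per point is where the saving would come from in this simplified picture; a real system would also need to handle points that appear or disappear between frames.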

Radial Book Images

Printing on the sides of books to support art.

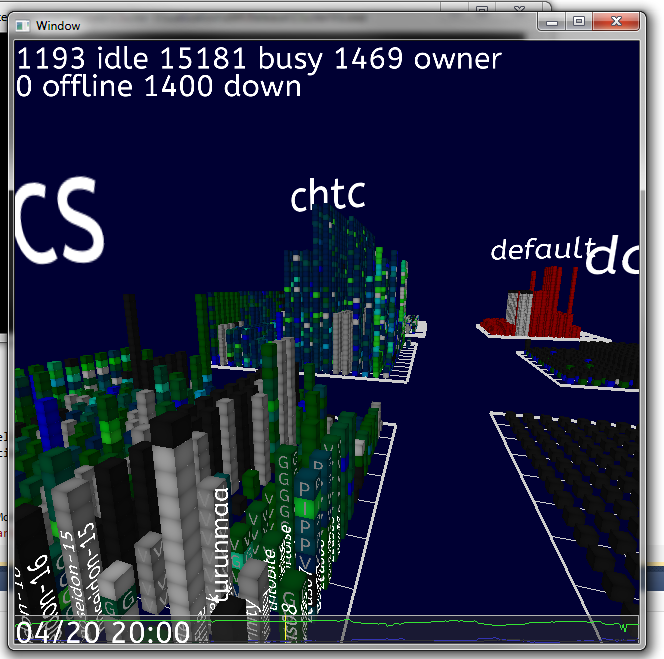

Visualization of Cluster Logs in VR

A small project to visualize usage patterns of the university's high-throughput computer systems in an immersive VR environment -- a 6-sided CAVE.

Immersive Virtual Skiing

A small game for 2014's Wisconsin Science Fest that allowed participants to virtually ski down mountains inside our six-sided CAVE.

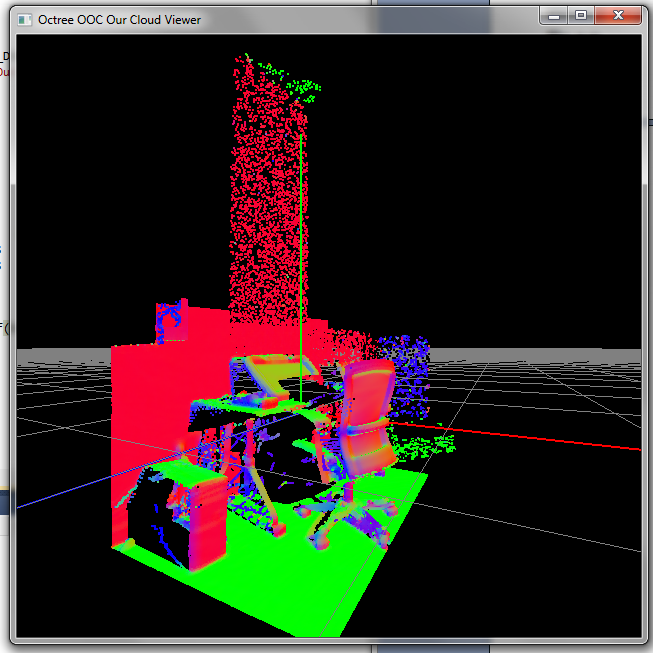

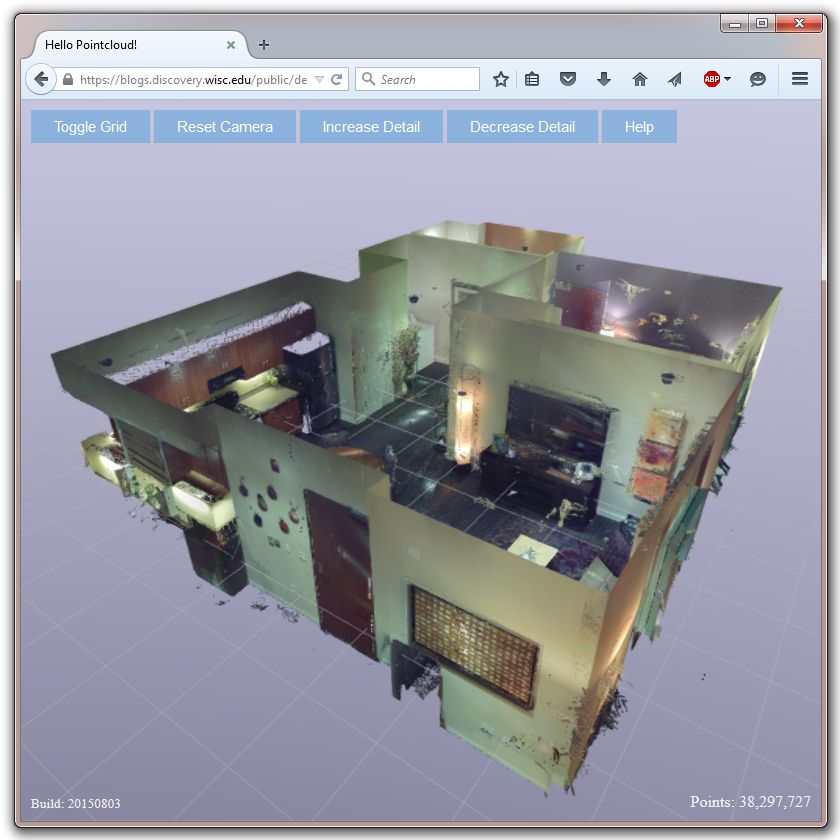

Point-based Rendering

Point cloud rendering of large data sets captured through LiDAR.

Using VR to investigate chronic neck pain

This was a collaborative effort between Ross Smith and me, who provided the hardware and programming, and Dan Harvie and Lorimer Moseley from the Body in Mind lab at UniSA. The idea was to use the Oculus Rift, with its very immersive wide field of view, to investigate where and how pain is learned and memorised (in the muscles or in the brain). To do so, a method similar to redirected walking is employed: the participant’s head rotation is multiplied by a constant gain factor and the onset of pain is measured.
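The rotation-gain manipulation can be sketched in a few lines. The snippet below is a minimal illustration, not the study software: the function names and the example gain values are hypothetical, and yaw is assumed to be measured in degrees relative to a forward-facing neutral pose.

```python
def apply_rotation_gain(real_yaw_deg, gain):
    """Return the yaw shown in the headset for a tracked head yaw.

    gain > 1 overstates the real rotation, gain < 1 understates it,
    and gain == 1 gives accurate visual feedback.
    """
    return gain * real_yaw_deg


def onset_change_percent(onset_with_gain_deg, onset_baseline_deg):
    """Relative change of the real rotation at pain onset versus baseline."""
    return 100.0 * (onset_with_gain_deg - onset_baseline_deg) / onset_baseline_deg


if __name__ == "__main__":
    # Illustrative values only: a 30 degree head turn under three gains.
    for gain in (0.8, 1.0, 1.2):
        print(gain, apply_rotation_gain(30.0, gain))
```

Comparing the real rotation at which pain is reported under each gain against the gain-of-one baseline gives the kind of relative shift that `onset_change_percent` computes.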

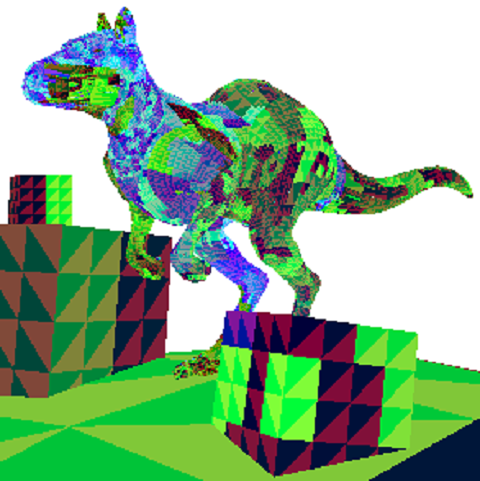

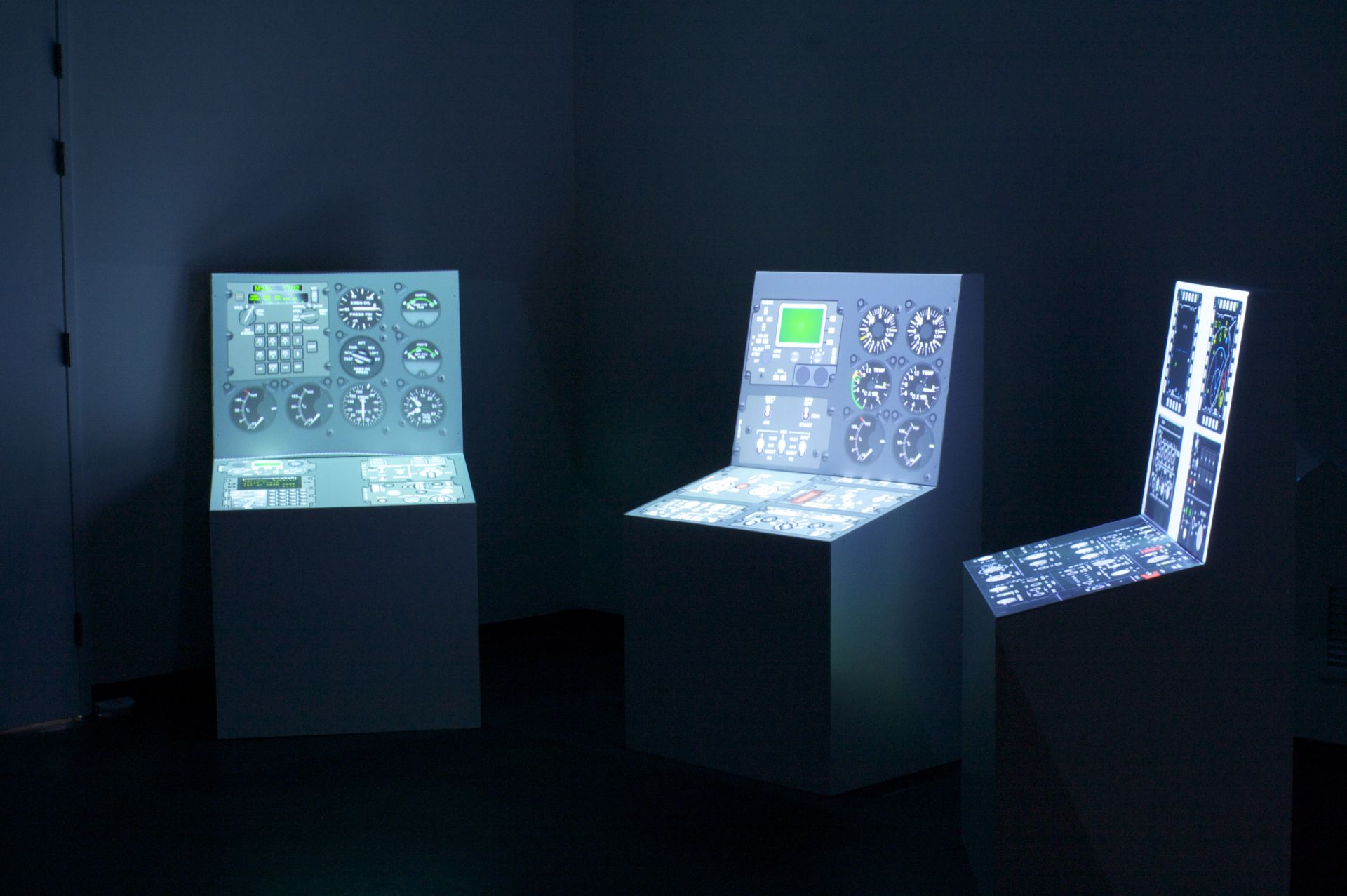

Rendering Techniques for Spatial Augmented Reality

My PhD thesis dealt with different rendering techniques for Spatial AR and what has to be changed so they can be implemented successfully in SAR.

Adapting Ray Tracing to Spatial Augmented Reality

Another project involved adapting ray tracing to Spatial AR. I used Nvidia’s OptiX framework to implement a real-time capable ray tracer. The user is tracked using an OptiTrack IR tracking system with a rigid body marker attached to the frame of a pair of cheap cinema glasses with the lenses removed. The implemented effects were reflection and refraction.

View-dependent Rendering in Spatial Augmented Reality

By using head tracking, we can calculate a second, perspective-correct ‘virtual’ view of the scene and paint it onto the surface of the prop using projective texturing. The end effect is similar to having cut holes into the solid surface. One application of this technique is adding purely virtual details to a physical prop. In the best (and most extreme) case, the physical prop is purely the bounding volume of the virtual object, while everything is displayed using view-dependent rendering techniques.
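The core of projective texturing is mapping a point on the prop's surface to a texture coordinate in the image rendered from the tracked head position. The sketch below shows that mapping for a simple symmetric pinhole camera. It is an illustrative assumption rather than the framework's actual code: the function names, the default field of view, and the fixed world up vector are all hypothetical.

```python
import math


def sub(a, b):
    return tuple(x - y for x, y in zip(a, b))


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def normalize(v):
    length = math.sqrt(dot(v, v))
    return tuple(x / length for x in v)


def camera_basis(eye, target, up=(0.0, 1.0, 0.0)):
    """Orthonormal right/up/forward axes for a camera at eye looking at target.

    Assumes the view direction is not parallel to the up vector.
    """
    forward = normalize(sub(target, eye))
    right = normalize(cross(forward, up))
    true_up = cross(right, forward)
    return right, true_up, forward


def projective_uv(point, eye, target, fov_y_deg=90.0, aspect=1.0):
    """Texture coordinate (u, v) of a surface point in the head-tracked view.

    Returns None if the point lies behind the viewer. Values outside the
    range 0..1 fall outside the rendered virtual view.
    """
    right, up, forward = camera_basis(eye, target)
    d = sub(point, eye)
    depth = dot(d, forward)
    if depth <= 0.0:
        return None
    half_height = depth * math.tan(math.radians(fov_y_deg) / 2.0)
    half_width = half_height * aspect
    u = 0.5 + 0.5 * dot(d, right) / half_width
    v = 0.5 + 0.5 * dot(d, up) / half_height
    return u, v


if __name__ == "__main__":
    head = (0.0, 0.0, 2.0)   # tracked head position (illustrative)
    focus = (0.0, 0.0, 0.0)  # point the virtual camera looks at
    print(projective_uv((1.0, 0.0, 0.0), head, focus))
```

In a renderer this lookup would typically run per fragment on the GPU, and the projector would then display the sampled colour at that surface point.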

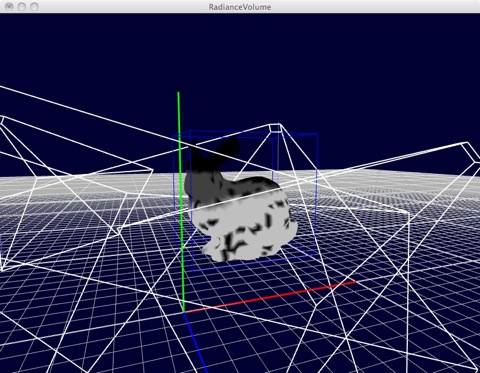

Irradiance Volumes in Spatial Augmented Reality

I tried using irradiance volumes to simulate the lighting conditions and the influence of different projectors on one object in SAR. If the end result were close enough to reality, it could be used to compensate for projector brightness and for blending. Unfortunately, it did not work out.

Adaptive Substrates for Spatial Augmented Reality

Projected images lack contrast and sharpness/local resolution. We tried to overcome these problems by creating a hybrid display that projects onto an eInk display.

Photorealistic Rendering in Spatial Augmented Reality

My Diplomarbeit. I implemented a ton of current-gen (at the time) game-engine features in the custom graphics engine of our Spatial AR framework. Implemented features included HDR rendering, deferred shading, and relief mapping.

Demoscene as an art form

Demos are great, and this study project aimed to characterize them as more than 'just' music videos with game engine tech. A focus of this project was to document and classify different types and techniques in demos and thereby establish a common stylistic language (independent of the purely technical terms) to describe and compare different demos.

The Nintendo DS as a VR input device

This was way before easy-to-program Android tablets were common (Android had not even officially been announced). The DS had a wide range of interesting input options, including a homebrew accelerometer. During this project the DS controlled an OpenSceneGraph application (with physics) over the Wi-Fi network. All the software was written using the homebrew devkitPro toolchain.

Realtime Hybrid NURBS Ray Tracing

This was a one-semester study project which resulted in an accepted paper about the method we developed. We were working on Augenblick, a realtime ray tracer that matured into a commercial application (Numenus’ RenderGin). The idea of this paper was that we could rasterize 32-bit pointers to the NURBS surfaces and use the rasterized result as a lookup for further (more expensive) NURBS intersection calculations.
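The lookup idea can be illustrated with a small sketch: a 32-bit surface identifier is split into four 8-bit colour channels, written to an ID buffer by the rasterizer, and read back per pixel to decide which surface needs the expensive intersection test. This is a simplified assumption about the approach, not the paper's implementation: the names `pack_id`, `unpack_id`, and `lookup_surface`, and the choice of 0 as the background value, are hypothetical.

```python
BACKGROUND = 0  # assumption: ID 0 marks pixels not covered by any surface


def pack_id(surface_id):
    """Split a 32-bit identifier into four 8-bit channels (R, G, B, A)."""
    if not 0 <= surface_id <= 0xFFFFFFFF:
        raise ValueError("surface_id must fit into 32 bits")
    return ((surface_id >> 24) & 0xFF,
            (surface_id >> 16) & 0xFF,
            (surface_id >> 8) & 0xFF,
            surface_id & 0xFF)


def unpack_id(rgba):
    """Reassemble the 32-bit identifier from an (R, G, B, A) tuple."""
    r, g, b, a = rgba
    return (r << 24) | (g << 16) | (b << 8) | a


def lookup_surface(id_buffer, x, y):
    """Return the surface ID stored at pixel (x, y), or None for background."""
    surface_id = unpack_id(id_buffer[y][x])
    return None if surface_id == BACKGROUND else surface_id


if __name__ == "__main__":
    # A 2x2 stand-in for the rasterized ID buffer.
    id_buffer = [
        [pack_id(0), pack_id(0x12345678)],
        [pack_id(7), pack_id(0)],
    ]
    print(lookup_surface(id_buffer, 1, 0))
```

Only pixels that return a surface ID would then go on to the full ray-surface intersection, which is what makes the rasterization pass useful as a cheap first stage.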

Publications

SafeHOME: Promoting Safe Transitions to the Home.

Abstract

This paper introduces the SafeHOME Simulator system, a set of immersive Virtual Reality Training tools and display systems to train patients in safe discharge procedures in captured environments of their actual houses. The aim is to lower patient readmission by significantly improving discharge planning and training. The SafeHOME Simulator is a project currently under review.

Bibtex

@article{broecker2016safehome, title={SafeHOME: Promoting Safe Transitions to the Home.}, author={Broecker, M and Ponto, K and Tredinnick, R and Casper, G and Brennan, PF}, journal={Studies in health technology and informatics}, volume={220}, pages={51}, year={2016} }

Access

Download IOS Press

Progressive Feedback Point Cloud Rendering for Virtual Reality Display

Abstract

Previous approaches to rendering large point clouds on immersive displays have generally created a trade-off between interactivity and quality. While these approaches have been quite successful for desktop environments when interaction is limited, virtual reality systems are continuously interactive, which forces users to suffer through either low frame rates or low image quality. This paper presents a novel approach to this problem through a progressive feedback-driven rendering algorithm. This algorithm uses reprojections of past views to accelerate the reconstruction of the current view. The presented method is tested against previous methods, showing improvements in both rendering quality and interactivity.

Bibtex

@INPROCEEDINGS{7504773, author={R. Tredinnick and M. Broecker and K. Ponto},booktitle={2016 IEEE Virtual Reality (VR)}, title={Progressive feedback point cloud rendering for virtual reality display}, year={2016}, pages={301-302}, keywords={Feedback loop;Geometry;Graphics processing units;Measurement;Rendering (computer graphics);Three-dimensional displays;Virtual reality;3D scanning;Point-based graphics;virtual reality}, doi={10.1109/VR.2016.7504773}, month={March},}

Access

Download Submitted paper

Methods for the Interactive Analysis and Playback of Large Body Simulations

Abstract

Simulations, such as n-body or smoothed particle hydrodynamics, create large amounts of time variant data that can generally only be visualized non-interactively by rendering the resulting interaction into movies from a fixed viewpoint. This is due to the large amount of unstructured data that make these data incapable of either loading into graphics memory or streaming from disk. The presented approach aims to preserve the interactively navigable 3D structure of the underlying data by reordering and compressing the data in such a way as to optimize playback rates. Additionally, the presented method is able to detect and describe coherent group motion between frames which might be used for analysis.

Bibtex

@INPROCEEDINGS{7500926, author={M. Broecker and K. Ponto}, booktitle={2016 IEEE Aerospace Conference}, title={Methods for the interactive analysis and playback of large body simulations}, year={2016}, pages={1-10}, keywords={Animation;Data models;Image coding;Image color analysis;Motion pictures;Octrees;Three-dimensional displays}, doi={10.1109/AERO.2016.7500926}, month={March},}

Access

Download Submitted paper

Neck Pain and Proprioception Revisited Using the Proprioception Incongruence Detection Test

Bibtex

@article{harvie2016neck, title={Neck pain and proprioception revisited using the proprioception incongruence detection test}, author={Harvie, Daniel S and Hillier, Susan and Madden, Victoria J and Smith, Ross T and Broecker, Markus and Meulders, Ann and Moseley, G Lorimer}, journal={Physical Therapy}, volume={96},number={5}, pages={671--678}, year={2016}, publisher={American Physical Therapy Association}}

Access

Access at sagepub.com

Experiencing Interior Environments: New Approaches for the Immersive Display of Large-Scale Point Cloud Data.

Abstract

This document introduces a new application for rendering massive LiDAR point cloud data sets of interior environments within high-resolution immersive VR display systems. Overall contributions are: to create an application which is able to visualize large-scale point clouds at interactive rates in immersive display environments, to develop a flexible pipeline for processing LiDAR data sets that allows display of both minimally processed and more rigorously processed point clouds, and to provide visualization mechanisms that produce accurate rendering of interior environments to better understand physical aspects of interior spaces. The work introduces three problems with producing accurate immersive rendering of LiDAR point cloud data sets of interiors and presents solutions to these problems. Rendering performance is compared between the developed application and a previous immersive LiDAR viewer.

Bibtex

@inproceedings{tredinnick2015experiencing, title={Experiencing interior environments: New approaches for the immersive display of large-scale point cloud data}, author={Tredinnick, Ross and Broecker, Markus and Ponto, Kevin}, booktitle={2015 IEEE Virtual Reality (VR)}, pages={297--298}, year={2015}, organization={IEEE} }

Access

Download here

Virtualizing Living and Working Spaces: Proof of Concept for a Biomedical Space-Replication Methodology

Abstract

The physical spaces within which the work of health occurs – the home, the intensive care unit, the emergency room, even the bedroom – influence the manner in which behaviors unfold, and may contribute to efficacy and effectiveness of health interventions. Yet the study of such complex workspaces is difficult. Health care environments are complex, chaotic workspaces that do not lend themselves to the typical assessment approaches used in other industrial settings. This paper provides two methodological advances for studying internal health care environments: a strategy to capture salient aspects of the physical environment and a suite of approaches to visualize and analyze that physical environment. We used a Faro™ laser scanner to obtain point cloud data sets of the internal aspects of home environments. The point cloud enables precise measurement, including the location of physical boundaries and object perimeters, color, and light, in an interior space that can be translated later for visualization on a variety of platforms. The work was motivated by vizHOME, a multi-year program to intensively examine the home context of personal health information management in a way that minimizes repeated, intrusive, and potentially disruptive in vivo assessments. Thus, we illustrate how to capture, process, display, and analyze point clouds using the home as a specific example of a health care environment. Our work presages a time when emerging technologies facilitate inexpensive capture and efficient management of point cloud data, thus enabling visual and analytical tools for enhanced discharge planning, new insights for designers of consumer-facing clinical informatics solutions, and a robust approach to context-based studies of health-related work environments.

Bibtex

@article{Brennan201553, title = "Virtualizing living and working spaces: Proof of concept for a biomedical space-replication methodology ", journal = "Journal of Biomedical Informatics ", volume = "57", number = "", pages = "53 - 61", year = "2015", issn = "1532-0464", doi = "http://dx.doi.org/10.1016/j.jbi.2015.07.007", url = "http://www.sciencedirect.com/science/article/pii/S1532046415001471", author = "Patricia Flatley Brennan and Kevin Ponto and Gail Casper and Ross Tredinnick and Markus Broecker", keywords = "Interior assessment" }

Access

Access at ScienceDirect/Elsevier

Bogus Visual Feedback Alters Onset of Movement-Evoked Pain in People with Neck Pain

Abstract

Pain is a protective perceptual response shaped by contextual, psychological, and sensory inputs that suggest danger to the body. Sensory cues suggesting that a body part is moving toward a painful position may credibly signal the threat and thereby modulate pain. In this experiment, we used virtual reality to investigate whether manipulating visual proprioceptive cues could alter movement-evoked pain in 24 people with neck pain. We hypothesized that pain would occur at a lesser degree of head rotation when visual feedback overstated true rotation and at a greater degree of rotation when visual feedback understated true rotation. Our hypothesis was clearly supported: When vision overstated the amount of rotation, pain occurred at 7% less rotation than under conditions of accurate visual feedback, and when vision understated rotation, pain occurred at 6% greater rotation than under conditions of accurate visual feedback. We concluded that visual-proprioceptive information modulated the threshold for movement-evoked pain, which suggests that stimuli that become associated with pain can themselves trigger pain.

Bibtex

@article{harvie2015bogus, title={Bogus visual feedback alters onset of movement-evoked pain in people with neck pain}, author={Harvie, Daniel S and Broecker, Markus and Smith, Ross T and Meulders, Ann and Madden, Victoria J and Moseley, G Lorimer}, journal={Psychological science}, pages={0956797614563339}, year={2015}, publisher={SAGE Publications}}

Access

Access at Sagepub.com

Depth Perception in View-Dependent Near-Field Spatial AR

Abstract

View-dependent rendering techniques are an important tool in Spatial Augmented Reality. These allow the addition of more detail and the depiction of purely virtual geometry inside the shape of physical props. This paper investigates the impact of different depth cues on the depth perception of users.

Bibtex

@inproceedings{broecker2014depth, title={Depth perception in view-dependent near-field spatial AR}, author={Broecker, Markus and Smith, Ross T and Thomas, Bruce H}, booktitle={Proceedings of the Fifteenth Australasian User Interface Conference-Volume 150}, pages={87--88}, year={2014}, organization={Australian Computer Society, Inc.}}

Download

Download here

Additional Material

See a video of the different depth cues on YouTube.

Adapting Ray Tracing to Spatial AR

Abstract

Ray tracing is an elegant and intuitive image generation method. The introduction of GPU-accelerated ray tracing and corresponding software frameworks makes this rendering technique a viable option for Augmented Reality applications. Spatial Augmented Reality employs projectors to illuminate physical models and is used in fields that require photorealism, such as design and prototyping. Ray tracing can be used to great effect in this Augmented Reality environment to create scenes of high visual fidelity. However, the peculiarities of SAR systems require that core ray tracing algorithms be adapted to this new rendering environment. This paper highlights the problems involved in using ray tracing in a SAR environment and provides solutions to overcome them. In particular, the following issues are addressed: ray generation, hybrid rendering and view-dependent rendering.

Bibtex

@INPROCEEDINGS{6671826, author={M. Broecker and B. H. Thomas and R. T. Smith}, booktitle={Mixed and Augmented Reality (ISMAR), 2013 IEEE International Symposium on}, title={Adapting ray tracing to Spatial Augmented Reality}, year={2013}, pages={1-6}, keywords={Augmented Reality;Ray tracing;Spatial AR}, doi={10.1109/ISMAR.2013.6671826}, month={Oct}}

Download

Download here

Spatial Augmented Reality Support for Design of Complex Physical Environments

Abstract

Effective designs rarely emerge from good structural design or aesthetics alone. It is more often the result of the end product's overall design integrity. Added to this, design is inherently an interdisciplinary collaborative activity. With this in mind, today's tools are not powerful enough to design complex physical environments, such as command control centers or hospital operating theaters. This paper presents the concept of employing projector-based augmented reality techniques to enhance interdisciplinary design processes.

Bibtex

@INPROCEEDINGS{5766958, author={B. H. Thomas and G. S. Von Itzstein and R. Vernik and S. Porter and M. R. Marner and R. T. Smith and M. Broecker and B. Close and S. Walker and S. Pickersgill and S. Kelly and P. Schumacher}, booktitle={Pervasive Computing and Communications Workshops (PERCOM Workshops), 2011 IEEE International Conference on}, title={Spatial augmented reality support for design of complex physical environments}, year={2011}, pages={588-593}, keywords={CAD;augmented reality;product design;production engineering computing;design activity;interdisciplinary design process;physical environment design;product design integrity;projector-based augmented reality;spatial augmented reality;Augmented reality;Australia;Collaboration;Computers;Hospitals;Prototypes;Surface treatment;Design;Intense Collaboration;Interdisciplinary Design;Spatial Augmented Reality}, doi={10.1109/PERCOMW.2011.5766958}, month={March},}

Download

Download from r-smith.net

Large Scale Spatial Augmented Reality for Design and Prototyping

Abstract

Spatial Augmented Reality allows the appearance of physical objects to be transformed using projected light. Computer controlled light projection systems have become readily available and cost effective for both commercial and personal use. This chapter explores how large Spatial Augmented Reality systems can be applied to enhanced design mock-ups. Unlike traditional appearance altering techniques such as paints and inks, computer controlled projected light can change the color of a prototype at the touch of a button allowing many different appearances to be evaluated. We present the customized physical-virtual tools we have developed such as our hand held virtual spray painting tool that allows designers to create many customized appearances using only projected light. Finally, we also discuss design parameters of building dedicated projection environments including room layout, hardware selection and interaction considerations.

Bibtex

@book{marner2011large, title={Large Scale Spatial Augmented Reality for Design and Prototyping}, author={Marner, Michael R and Smith, Ross T and Porter, Shane R and Broecker, Markus M and Close, Benjamin and Thomas, Bruce H}, year={2011}, publisher={Springer}}

Access

Get it from Springer Link

Adaptive Substrate for Enhanced Spatial Augmented Reality Contrast and Resolution

Abstract

This poster presents the concept of combining two display technologies to enhance graphics effects in spatial augmented reality (SAR) environments. This is achieved by using an ePaper surface as an adaptive substrate instead of a white painted surface allowing the development of novel image techniques to improve image quality and object appearance in projector-based SAR environments.

Bibtex

@article{broecker2011adaptive, title={Adaptive substrate for enhanced spatial augmented reality contrast and resolution}, author={Broecker, Markus and Smith, Ross T and Thomas, Bruce H}, journal={transport}, volume={3}, number={6}, pages={22}, year={2011} }

Download

Download here

Accelerating Rendering of NURBS Surfaces by Using Hybrid Ray Tracing

Abstract

In this paper we present a new method for accelerating ray tracing of scenes containing NURBS (Non Uniform Rational B-Spline) surfaces by exploiting the GPU’s fast z-buffer rasterization for regular triangle meshes. In combination with a lightweight, memory efficient data organization this allows for fast calculation of primary ray intersections using a Newton Iteration based approach executed on the CPU. Since all employed shaders are kept simple the algorithm can profit from older graphics hardware as well. We investigate two different approaches, one initiating ray-surface intersections by referencing the surface through its child-triangles. The second approach references the surface directly and additionally delivers initial guesses, required for the Newton Iteration, using graphics hardware vector interpolation capabilities. Our approaches achieve a rendering acceleration of up to 95% for primary rays compared to full CPU ray tracing without compromising image quality.

Bibtex

@article{abert2008accelerating, title={Accelerating Rendering of NURBS Surfaces by Using Hybrid Ray Tracing}, author={Abert, Oliver and Br{\"o}cker, Markus and Spring, Rafael}, year={2008}, publisher={V{\'a}clav Skala-UNION Agency}}

Download

Download from otik.zcu.cz