Spectral Rendering on the GPU

The "Problem"

Traditional rendering uses RGB colours. While this is sufficient for most tasks (and is fast and supported by all hardware), it has problems of its own. Spectral rendering, on the other hand, does not use abstract ‘colour’ values for materials or lighting; it takes measured ‘spectral reflectances’ and ‘spectral power distributions’ (SPDs) and performs the calculations in a physically correct manner.

Implementation

Many of the data layouts used for spectral rendering are multi-dimensional: essentially one array of values for each pixel. Traditional rendering, in contrast, usually has only 4 values per pixel: R, G, B and A. 1D arrays translate easily to 1D textures, and texture filtering provides linear interpolation between neighbouring values, which turns the samples stored in the texture into a piecewise linear function. SPD textures need only a single channel, but at half or float precision. A relatively low resolution of only 320 texels already allows the complete visible spectrum (380-700 nm) to be sampled in 1 nm steps. Note that the CIE observer is commonly tabulated in 5 nm steps, so a texture dimension of 64 texels is more realistic.
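The lookup that linear texture filtering performs on a 1D SPD texture can be sketched on the CPU as follows. This is only an illustration: the function name `sample_spd`, the 5-sample reflectance curve, and the convention that the first and last samples sit exactly at 380 nm and 700 nm are assumptions made for the example (real texel-centre addressing differs slightly).

```python
import math


def sample_spd(samples, wavelength_nm, lo_nm=380.0, hi_nm=700.0):
    """Piecewise-linear lookup into evenly spaced SPD samples.

    Assumption: samples[0] is the value at lo_nm and samples[-1] the value
    at hi_nm, a simplification of real texel-centre addressing.
    """
    if len(samples) < 2:
        raise ValueError("need at least two samples")
    # Clamp to the tabulated range, similar to clamp-to-edge addressing.
    w = min(max(wavelength_nm, lo_nm), hi_nm)
    # Continuous sample position in [0, len(samples) - 1].
    pos = (w - lo_nm) / (hi_nm - lo_nm) * (len(samples) - 1)
    i = min(int(math.floor(pos)), len(samples) - 2)
    t = pos - i
    # Linear blend of the two neighbouring samples.
    return (1.0 - t) * samples[i] + t * samples[i + 1]


# Hypothetical reflectance curve sampled at 380, 460, 540, 620 and 700 nm.
reflectance = [0.10, 0.20, 0.60, 0.40, 0.30]
print(sample_spd(reflectance, 500.0))  # halfway between the 460 and 540 nm samples
```

On the GPU the same blend comes for free from the texture unit once the wavelength is remapped to a texture coordinate.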

So the main issue becomes mapping these orthogonal data storages to each other. This is not a big problem for input, as we have as many as 16 texture units. Many 1D SPD textures can even be packed into one large 2D texture, in which one coordinate simply selects the material SPD to be used. On modern hardware, texture arrays can be used instead with the same effect.
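As a rough sketch of the packing idea, the snippet below lays several equally long SPDs out as rows of one row-major buffer, the way a 2D texture upload would expect them. The helper names (`pack_spd_atlas`, `material_row_v`, `texel`) are hypothetical. Sampling each row at its texel centre is an assumption added here so that bilinear filtering does not blend neighbouring materials.

```python
def pack_spd_atlas(spds):
    """Pack equally long 1D SPDs into one row-major buffer, one row per material."""
    width = len(spds[0])
    if any(len(s) != width for s in spds):
        raise ValueError("all SPDs must share the same sample count")
    flat = [value for spd in spds for value in spd]
    return flat, width, len(spds)


def material_row_v(material, height):
    """Normalised v coordinate of the centre of a material's row."""
    return (material + 0.5) / height


def texel(flat, width, material, band):
    """Unfiltered fetch of one spectral sample of one material."""
    return flat[material * width + band]


# Two hypothetical 3-sample materials.
flat, width, height = pack_spd_atlas([[0.1, 0.2, 0.3], [0.7, 0.8, 0.9]])
print(texel(flat, width, 1, 2), material_row_v(1, height))
```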

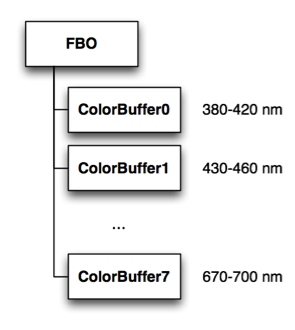

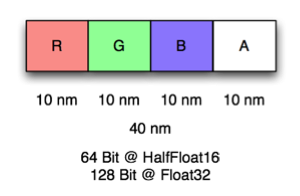

Output, however, is a problem. As stated earlier, usually only 4 channels are available. Multiple Render Targets (a single FBO with 8 bound colour buffers), however, provide 32 output channels, which is enough to represent the visible spectrum at a 10 nm sampling resolution.
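One possible way to assign the 32 spectral bands to the 8 x 4 output channels is a plain row-major mapping, sketched below. The band ordering and the choice of band centres starting at 385 nm are assumptions for illustration; any consistent mapping works as long as both shaders agree on it.

```python
NUM_TARGETS = 8          # colour attachments bound to the FBO
CHANNELS_PER_TARGET = 4  # RGBA
NUM_BANDS = NUM_TARGETS * CHANNELS_PER_TARGET
LO_NM = 380.0
STEP_NM = 10.0


def band_to_output(band):
    """Return (attachment index, channel index) for a spectral band."""
    if not 0 <= band < NUM_BANDS:
        raise IndexError("band out of range")
    return divmod(band, CHANNELS_PER_TARGET)


def band_centre_nm(band):
    """Centre wavelength of a band, assuming 10 nm bins starting at 380 nm."""
    return LO_NM + (band + 0.5) * STEP_NM


for band in (0, 13, 31):
    print(band, band_to_output(band), band_centre_nm(band))
```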

Storage and bandwidth are the biggest problems here. For example, a full HD render target at half-float precision requires 1920 x 1080 x 8 x 4 x 16 bit = ~1 Gbit, or roughly 133 MB of memory. This is for the render target alone, without any additional textures or geometry. At 30 FPS, writing it once per frame requires a bandwidth of about 32 Gbit/s (roughly 4 GB/s) just for the output.
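Because the product above multiplies out in bits, the units are easy to misread. The following check converts to bytes explicitly. It uses decimal MB and GB and counts only one write of the full render target per frame, ignoring overdraw, blending, and the read in the second pass.

```python
WIDTH, HEIGHT = 1920, 1080
TARGETS, CHANNELS = 8, 4
BITS_PER_CHANNEL = 16  # half float
FPS = 30

bits_per_frame = WIDTH * HEIGHT * TARGETS * CHANNELS * BITS_PER_CHANNEL
bytes_per_frame = bits_per_frame // 8
bytes_per_second = bytes_per_frame * FPS

print(f"{bits_per_frame / 1e9:.2f} Gbit per frame")
print(f"{bytes_per_frame / 1e6:.1f} MB per frame")
print(f"{bytes_per_second / 1e9:.2f} GB/s at {FPS} FPS")
```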

Multisampling and Anti-Aliasing

Multi-sampling should not be affected. Whether the samples being resolved and interpolated hold a colour or an SPD makes no difference, since both are ultimately representations of colour.

Shaders

Two shaders are needed. The first fills a spectral FBO render target that contains the final samples of the reflected spectrum at each pixel. The second takes this collection of render targets and assembles an XYZ tristimulus image from it. It then performs tone mapping so that out-of-range intensities can be displayed, and finally converts the XYZ value at each pixel to RGB or sRGB for display on the screen.
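The per-pixel work of the second shader can be sketched on the CPU as below. Several things here are assumptions rather than part of the design described above: the function names are hypothetical, the colour matching tables `xbar`, `ybar`, `zbar` must be supplied by the caller (they are not reproduced here), the integral is a plain Riemann sum without the usual normalisation constant, and a simple Reinhard-style operator stands in for whatever tone mapping is finally chosen. The matrix is the commonly quoted XYZ to linear sRGB (D65) matrix.

```python
def spectrum_to_xyz(spd, xbar, ybar, zbar, step_nm):
    """Riemann-sum approximation of the tristimulus integrals (unnormalised)."""
    x = sum(s * c for s, c in zip(spd, xbar)) * step_nm
    y = sum(s * c for s, c in zip(spd, ybar)) * step_nm
    z = sum(s * c for s, c in zip(spd, zbar)) * step_nm
    return (x, y, z)


def reinhard(xyz):
    """Compress luminance Y to Y / (1 + Y) while keeping chromaticity (assumed operator)."""
    x, y, z = xyz
    scale = 1.0 / (1.0 + y)
    return (x * scale, y * scale, z * scale)


def xyz_to_linear_srgb(xyz):
    """XYZ (D65) to linear sRGB using the commonly quoted matrix."""
    x, y, z = xyz
    r = 3.2406 * x - 1.5372 * y - 0.4986 * z
    g = -0.9689 * x + 1.8758 * y + 0.0415 * z
    b = 0.0557 * x - 0.2040 * y + 1.0570 * z
    return (r, g, b)


def srgb_encode(c):
    """Clamp to [0, 1] and apply the sRGB transfer function."""
    c = min(max(c, 0.0), 1.0)
    return 12.92 * c if c <= 0.0031308 else 1.055 * c ** (1.0 / 2.4) - 0.055


def resolve_pixel(spd, xbar, ybar, zbar, step_nm):
    """Spectrum -> XYZ -> tone mapping -> display sRGB for one pixel."""
    xyz = reinhard(spectrum_to_xyz(spd, xbar, ybar, zbar, step_nm))
    return tuple(srgb_encode(c) for c in xyz_to_linear_srgb(xyz))
```

In the actual shader the sums would run over the 32 channels read from the 8 render targets, with the colour matching tables stored as constants or in a small lookup texture.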

Shading Model

I am currently looking into the shading model. Oren-Nayar shading is used instead of Lambertian shading. I am not yet sure how to implement the final brightness. Currently the most promising approach is to scale the spectral reflectance distribution uniformly by the calculated shading term.
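A minimal sketch of that idea follows, assuming the widely used qualitative approximation of the Oren-Nayar model and leaving out the albedo/pi factor, since the reflectance SPD takes the place of the albedo. The function names and the exact normalisation are assumptions for illustration and are not settled by the text above.

```python
import math


def oren_nayar_term(theta_i, theta_o, phi_diff, sigma):
    """Scalar Oren-Nayar shading term (qualitative model, no albedo/pi factor).

    theta_i, theta_o: incident and outgoing polar angles in radians.
    phi_diff: difference of the azimuth angles in radians.
    sigma: surface roughness in radians (0 reduces to Lambert).
    """
    s2 = sigma * sigma
    a = 1.0 - 0.5 * s2 / (s2 + 0.33)
    b = 0.45 * s2 / (s2 + 0.09)
    alpha = max(theta_i, theta_o)
    beta = min(theta_i, theta_o)
    rough = b * max(0.0, math.cos(phi_diff)) * math.sin(alpha) * math.tan(beta)
    return max(0.0, math.cos(theta_i)) * (a + rough)


def scale_spd(reflectance, term):
    """Scale every spectral sample by the same shading term."""
    return [r * term for r in reflectance]


# Hypothetical 3-band reflectance under a 30 degree incident angle.
term = oren_nayar_term(math.radians(30), math.radians(45), 0.0, 0.3)
print(scale_spd([0.2, 0.5, 0.8], term))
```

With `sigma = 0` the term reduces to the Lambertian cosine, which makes it easy to compare both models in the prototype.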

Materials have many parameters. A simplification is needed, and I am settling on a single colour (SPD) per material that is also independent of the BRDF. Texturing will only add prestructured noise to the shading term or change the normals (detail or normal/bump mapping), but will not change the SPD at each texel. There are several reasons for this decision: ease of implementation and design for this first prototype, lack of data for spectral BRDFs, and data storage problems (a spectral, surface-location dependent BRDF is a 5-dimensional function).